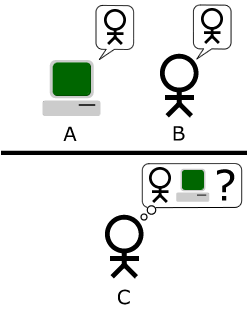

*The "standard interpretation" of the Turing Test, in which player C, the interrogator, tries to determine which player, A or B, is a computer and which is a human. The interrogator may use only the responses to written questions to make the determination. (Photo credit: Wikipedia)*

Ever since 1950, one of the most popular measuring sticks of artificial intelligence has been the Turing test, named after mathematician Alan Turing. In one common version of the idea, a program with some kind of artificial intelligence should be able to convince more than 30 percent of people, through text-based chat alone, that it's a human being. In June 2014, researchers claimed that a chatbot named Eugene Goostman did just that.

Nowadays, however, many experts are questioning whether the Turing test is really the best test. Eugene Goostman posed as a 13-year-old Ukrainian boy, and tricking people into believing that is definitely an achievement, but it's not necessarily the ideal display of true, humanlike thought.

So what would be a better test for artificial intelligence? One front-runner is an exam that relies on common sense: specifically, a test built from what are called Winograd schemas. Because Winograd schemas depend on cultural and background knowledge about the world, they're super easy for people and difficult for computers.

How to test computers for common sense

The test would take the form of a multiple-choice reading-comprehension quiz, but the text itself would have some very specific features. It would consist of Winograd schemas: pairs of sentences that differ in just one word, where changing that word flips the intended meaning. They generally hinge on an ambiguous pronoun or possessive. A famous example comes from Stanford computer scientist Terry Winograd:

- "The city councilmen refused the demonstrators a permit because they feared violence. Who feared violence?"
  1) The city councilmen
  2) The demonstrators

And:

- "The city councilmen refused the demonstrators a permit because they advocated violence. Who advocated violence?"
  1) The city councilmen
  2) The demonstrators

Most human beings can easily answer these questions. We use our common sense to figure out what "they" refers to in each case, and that common sense combines extensive cultural background knowledge with analytical skills. (In the first question, we can deduce that the city councilmen feared violence. In the second, the demonstrators advocated violence.)

For computers, however, these questions can be quite difficult. From a grammatical standpoint, the "they" in the sentences is technically unclear. In both questions, "they" could be either the councilmen or the demonstrators.

A computer could have access to all of Google and still not really be able to grasp that city councilmen are probably less likely to advocate violence than demonstrators. It's simply less culturally appropriate for councilmen to do so. But you're not going to find that in the dictionary under "city councilmen."

Here are some more Winograd schemas, from a growing, open collection of more than 100:

- The trophy doesn't fit into the brown suitcase because it's too [small/large]. What is too [small/large]? Answers: the suitcase/the trophy.

- Jane gave Joan candy because she [was/wasn't] hungry. Who [was/wasn't] hungry? Answers: Joan/Jane.

- The woman held the girl against her [chest/will]. Whose [chest/will]? Answers: the woman's/the girl's.
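To make the bracket notation above concrete, here is a minimal Python sketch of how a schema might be stored and scored. It assumes a schema can be written as a sentence template with one swappable word pair and one correct answer per word; the `WinogradSchema` class, its fields, the `{word}` placeholder convention and the `score` helper are all hypothetical illustrations, not the format of any published collection.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class WinogradSchema:
    """One schema: a template whose single slot flips the pronoun's referent.

    Hypothetical format: '{word}' marks the slot in both sentence and question.
    """
    sentence: str
    question: str
    words: tuple[str, str]    # the special word and its alternate
    answers: tuple[str, str]  # correct answer for each word, in the same order
    choices: tuple[str, str]  # the two candidate referents shown to the test-taker

    def variants(self):
        """Expand the template into its two concrete multiple-choice questions."""
        for word, answer in zip(self.words, self.answers):
            text = self.sentence.format(word=word) + " " + self.question.format(word=word)
            yield text, self.choices, answer


def score(schemas, guess):
    """Return the fraction of questions a guessing function answers correctly.

    `guess` takes (question_text, choices) and returns one of the choices.
    """
    total = 0
    correct = 0
    for schema in schemas:
        for text, choices, answer in schema.variants():
            total += 1
            if guess(text, choices) == answer:
                correct += 1
    return correct / total


# Two of the schemas from the list above, encoded in the hypothetical format.
trophy = WinogradSchema(
    sentence="The trophy doesn't fit into the brown suitcase because it's too {word}.",
    question="What is too {word}?",
    words=("small", "large"),
    answers=("the suitcase", "the trophy"),
    choices=("the trophy", "the suitcase"),
)
jane = WinogradSchema(
    sentence="Jane gave Joan candy because she {word} hungry.",
    question="Who {word} hungry?",
    words=("was", "wasn't"),
    answers=("Joan", "Jane"),
    choices=("Jane", "Joan"),
)

# A guesser that always picks the first candidate, ignoring the text entirely.
print(score([trophy, jane], lambda text, choices: choices[0]))  # → 0.5
```

The pairing is what makes the format hard to game: because the two variants of each schema have different correct answers, any guesser that always picks the same candidate gets exactly one of each pair right, so it can't score better than chance.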

2: The shift to mobile devices and, on mobile, to apps. Distribution

3: New business models for news. Parsing & distribution

4: Analytics in news production. Distribution

5: The “product” focus in news companies. All three (but still traditional outputs as above)

6: Interaction design and improving user experience (UX). Distribution

7: Data journalism. Parsing

8: Continuous improvement in content management systems and thus in work flow. Parsing

9: Structured data. Parsing

10: Personalization in news products. Distribution

11: Transparency and trust. Distribution

12: User generated content. Gathering (and was my area of speciality at Storyful)

13: Automation and “robot journalism.” All three

14: Creating an agile culture in newsrooms. Improvement in processes of all three

15: The personal franchise model in news. All three

16: News verticals and niche journalism. Still within existing paradigm

17: The future of context and explainer journalism. Parsing & distribution

18: “News as a service.” A product of sorts, but within current paradigm.

Murdoch –

THAT is it.

Polarized media, direct-from-source spin and just plain false information are increasing.

1. The corrective post, fixing wrong information from elsewhere: http://talkingpointsmemo.com/edblog/breitbart-issues-best-correction-since-forever

2. The political fact check, often difficult to report and write and fraught with consequences: http://www.wral.com/fact-check-a-500-million-education-that-isn-t-quite-what-it-seems/13935936/

3. The full site with a mission to correct false information: http://www.emergent.info/

Part of this relates to product and presentation: a 140-character tweet can sometimes serve as a fact check, or conversely as a way to obfuscate, cherry-pick and spin numbers. Knowing which format works best and most efficiently to find and convey the truth is a factor, as HF notes in his efforts to sift through a bunch of tweets.

Journalism is not only about content; it’s also about sales (to advertisers or readers) and distribution.

- Segmentation of digital journalism into different products (as The New York Times tried to do with NYT Opinion, which didn’t work, and NYT Now, which apparently did). And consequently:

- Gaming

- Internet of Things

- Streaming media

- Sensory media (e.g., texting smells)

- Wearable technology

http://goo.gl/FOcDOv